Clustering is an unsupervised learning technique used in machine learning and data mining. It involves grouping data points or objects into clusters based on their similarities, with the goal of maximizing intra-cluster similarity and minimizing inter-cluster similarity. Clustering is widely used in areas such as pattern recognition, image analysis, marketing, and bioinformatics.

The main goal of clustering is to discover hidden patterns or structures within a dataset without any prior knowledge about the data. It helps identify relationships between data points and uncover underlying patterns that may not be obvious at first glance. This makes it a powerful tool for exploratory data analysis and for gaining valuable insights from large datasets.

Introduction to Clustering

There are several types of clustering algorithms, including k-means, hierarchical clustering, density-based clustering, and fuzzy c-means. Each algorithm has its own strengths and weaknesses and is suited to different types of datasets.

One popular approach to clustering is k-means, which aims to partition a given dataset into k clusters by minimizing the sum of squared distances between each point and its respective cluster centroid. However, one major limitation of k-means is that it requires the number of clusters (k) to be specified in advance.
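To make the objective concrete, here is a minimal sketch of the quantity k-means tries to minimize. The function names and the toy data are illustrative assumptions, not part of any particular library.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def sum_of_squared_distances(points, centroids, labels):
    """k-means objective: total squared distance from each point to its assigned centroid."""
    return sum(squared_distance(p, centroids[label]) for p, label in zip(points, labels))


# Toy data (assumed for illustration): two pairs of points, one centroid per pair.
points = [(0.0, 0.0), (0.0, 2.0), (10.0, 0.0), (10.0, 2.0)]
labels = [0, 0, 1, 1]                   # cluster index assigned to each point
centroids = [(0.0, 1.0), (10.0, 1.0)]   # mean of each cluster

print(sum_of_squared_distances(points, centroids, labels))  # 4.0
```

Note that both `centroids` and `labels` presuppose a value of k; the objective on its own gives no guidance on how many clusters to use.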

This is where X-Means comes in: an efficient extension of the traditional k-means algorithm that automatically determines the optimal number of clusters in a dataset. Developed by Dan Pelleg et al., X-Means uses the Bayesian Information Criterion (BIC) as an objective function to evaluate different cluster configurations based on their complexity and goodness of fit.

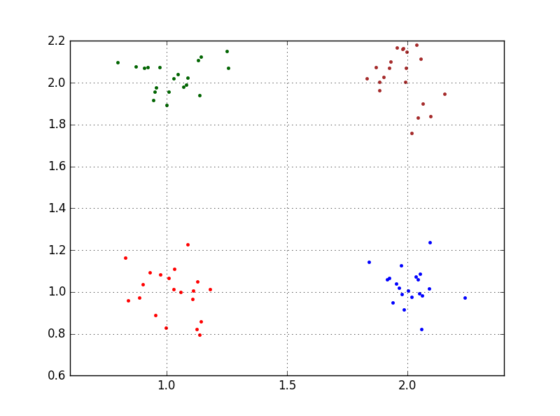

The basic idea behind X-Means is similar to that of k-means: it starts by randomly selecting k initial centroids from the dataset. It then iteratively improves these centroids by reassigning data points to their nearest centroid and recalculating the centroids’ positions until convergence occurs.
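The assign-and-update loop described above can be sketched in plain Python as follows. This is a simplified illustration under stated assumptions (random data points as initial centroids, Euclidean distance, a hypothetical `kmeans` helper), not an optimized or definitive implementation.

```python
import random


def squared_distance(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def mean_point(points):
    """Coordinate-wise mean of a non-empty list of points."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def kmeans(points, k, max_iter=100, seed=0):
    """Basic k-means: returns (centroids, labels) for a list of points."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # pick k data points as initial centroids
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point goes to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda j: squared_distance(p, centroids[j]))
            for p in points
        ]
        if new_labels == labels:  # no assignment changed: converged
            break
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, label in zip(points, labels) if label == j]
            if members:  # an empty cluster keeps its previous centroid
                centroids[j] = mean_point(members)
    return centroids, labels


data = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, labels = kmeans(data, k=2)
print(centroids, labels)
```

The caller still has to supply `k`, which is exactly the step X-Means tries to automate.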

However, unlike traditional k-means, where only one model with a fixed value of k is considered, X-Means allows for the exploration of different cluster solutions with varying numbers of clusters. It does this by recursively splitting the dataset into smaller subclusters and evaluating each configuration using the BIC.

What is X-Means?

X-Means is a clustering algorithm designed to automatically determine the optimal number of clusters in a dataset. Unlike traditional methods, X-Means iteratively splits and merges clusters based on the Bayesian Information Criterion (BIC), balancing accuracy and simplicity. It starts with an initial guess for the number of clusters, performs k-means clustering, evaluates the clusters using BIC, and splits clusters if necessary. It then merges overlapping clusters to improve the overall BIC score. Because the number of clusters adapts to the data, X-Means can approximate datasets with varying densities and irregular shapes by using more clusters where they are needed, although each individual cluster is still modeled as roughly spherical, as in k-means. This makes it a useful tool for tasks such as customer segmentation and image recognition.

How Does X-Means Work?

X-Means is a clustering algorithm derived from k-means, with the advantage of automatically determining the optimal number of clusters for a dataset. It starts by randomly assigning data points to clusters and then iteratively splits and merges them until it finds the best number of clusters. The process involves two main steps:

- split

- merge

In the split step, a cluster is divided into smaller ones if doing so improves clustering quality, as measured by metrics such as the sum of squared errors (SSE) and the Bayesian Information Criterion (BIC). Conversely, in the merge step, smaller clusters are combined into larger ones if doing so improves the BIC score. Merging never lowers the SSE, so the BIC, which also penalizes model complexity, is the deciding measure there. This iterative process continues until a stopping criterion is met. When the number of clusters is not known in advance, X-Means offers a more efficient and robust approach than running conventional k-means repeatedly for different values of k.
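The split decision can be sketched as a comparison of BIC scores for "keep one cluster" versus "split into two". The formula below is one common BIC-style score for hard-assigned spherical Gaussians with a shared variance, where higher is better; it is an assumption made for illustration and may differ in detail from the formula used in the original X-Means paper or in specific libraries. The names `bic_score` and `should_split` are hypothetical, and the two candidate children are assumed to come from a 2-means run on the parent cluster.

```python
import math


def mean_point(points):
    """Coordinate-wise mean of a non-empty list of points."""
    n = len(points)
    return tuple(sum(c) / n for c in zip(*points))


def sse(points):
    """Sum of squared distances from each point to the cluster mean."""
    center = mean_point(points)
    return sum(sum((a - b) ** 2 for a, b in zip(p, center)) for p in points)


def bic_score(clusters):
    """BIC-style score (higher is better) for a hard partition.

    Assumes spherical Gaussians with one shared variance; requires more
    points than clusters.
    """
    k = len(clusters)
    n = sum(len(c) for c in clusters)
    dim = len(clusters[0][0])
    total_sse = sum(sse(c) for c in clusters)
    variance = max(total_sse / (dim * (n - k)), 1e-12)  # floor avoids log(0)
    log_likelihood = (
        sum(len(c) * math.log(len(c) / n) for c in clusters)   # mixing weights
        - 0.5 * n * dim * math.log(2 * math.pi * variance)      # normalization
        - total_sse / (2 * variance)                            # fit term
    )
    n_params = (k - 1) + k * dim + 1  # weights, centroids, shared variance
    return log_likelihood - 0.5 * n_params * math.log(n)


def should_split(child_a, child_b):
    """True if two children score better than their union as one cluster."""
    return bic_score([child_a, child_b]) > bic_score([child_a + child_b])


# Two well-separated groups: splitting is favored.
left = [(0, 0), (0, 1), (1, 0), (1, 1)]
right = [(10, 10), (10, 11), (11, 10), (11, 11)]
print(should_split(left, right))

# One compact group cut in half: the complexity penalty outweighs the gain.
print(should_split([(0, 0), (0, 1)], [(1, 0), (1, 1)]))
```

The same comparison, read in the other direction, gives a merge test: two clusters are merged when their union scores at least as well as the pair.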

Hierarchical Clustering

Hierarchical clustering is a popular and effective method for finding natural groupings within a dataset. It works by iteratively merging individual data points or clusters into larger groups, creating a hierarchical tree-like structure known as a dendrogram. This approach is particularly useful when the number of clusters in a dataset is unknown, making it an ideal technique for exploratory data analysis.

One of the key advantages of hierarchical clustering is its ability to capture the underlying structure of complex datasets. By visualizing the dendrogram, we can gain insights into how different clusters are related to each other and identify any potential outliers or anomalies. This method also allows for flexibility in determining cluster boundaries, as we can choose where to cut the dendrogram based on our specific needs.

There are two main types of hierarchical clustering:

Agglomerative clustering starts with each data point as its own cluster and then merges clusters until all points belong to one large cluster. Divisive clustering takes the opposite approach, starting with all points in one cluster and then splitting them into smaller groups until each point forms its own cluster.
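As a rough illustration of the agglomerative direction, the sketch below repeatedly merges the two closest clusters and stops once a requested number of clusters remains, which corresponds to cutting the dendrogram at that level. It assumes Euclidean distance and single linkage, uses a naive search over all cluster pairs, and the function names are hypothetical.

```python
import math


def single_link_distance(cluster_a, cluster_b):
    """Distance between the closest pair of points, one from each cluster."""
    return min(math.dist(p, q) for p in cluster_a for q in cluster_b)


def agglomerative(points, n_clusters):
    """Naive agglomerative clustering that stops at n_clusters clusters."""
    clusters = [[p] for p in points]  # every point starts as its own cluster
    while len(clusters) > n_clusters:
        # Find the pair of clusters (i < j) with the smallest linkage distance.
        i, j = min(
            ((a, b) for a in range(len(clusters)) for b in range(a + 1, len(clusters))),
            key=lambda pair: single_link_distance(clusters[pair[0]], clusters[pair[1]]),
        )
        # Merge cluster j into cluster i; j > i, so index i stays valid.
        clusters[i].extend(clusters.pop(j))
    return clusters


points = [(0, 0), (0, 1), (5, 5), (5, 6), (20, 20)]
print(agglomerative(points, 3))
# [[(0, 0), (0, 1)], [(5, 5), (5, 6)], [(20, 20)]]
```

Running it with `n_clusters=1` carries the merging all the way to a single cluster, as described above.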

A key decision when using hierarchical clustering is choosing an appropriate distance metric to measure similarity between data points. The most commonly used metrics include Euclidean distance, Manhattan distance, and cosine similarity. Different metrics may be appropriate for different types of data, so it’s important to consider which one best captures the relationships between your variables.
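For reference, the three measures mentioned above can be written in a few lines of plain Python. The function names are illustrative; note that cosine similarity is a similarity (higher means more alike), not a distance, and is undefined for zero vectors.

```python
import math


def euclidean_distance(p, q):
    """Straight-line distance between two points."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


def manhattan_distance(p, q):
    """Sum of absolute coordinate differences."""
    return sum(abs(a - b) for a, b in zip(p, q))


def cosine_similarity(p, q):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(p, q))
    norm_p = math.sqrt(sum(a * a for a in p))
    norm_q = math.sqrt(sum(b * b for b in q))
    return dot / (norm_p * norm_q)


p, q = (1.0, 0.0), (4.0, 4.0)
print(euclidean_distance(p, q))           # 5.0
print(manhattan_distance(p, q))           # 7.0
print(round(cosine_similarity(p, q), 4))  # 0.7071
```

The example shows how the measures can disagree: the two points are far apart by both distance measures, yet their directions differ by only 45 degrees.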

Another important consideration is selecting an appropriate linkage criterion for merging clusters at each iteration. Popular linkage methods include single linkage (also known as nearest neighbor), complete linkage (also known as farthest neighbor), average linkage (also known as UPGMA), and Ward’s method. Each method has its strengths and weaknesses, so it’s essential to understand their impact on the resulting clusters.
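The linkage criteria differ only in how they turn point-to-point distances into a cluster-to-cluster score. The sketch below assumes Euclidean distance and uses hypothetical function names; for Ward’s method it computes the increase in within-cluster sum of squares that a merge would cause, which is the quantity that method minimizes.

```python
import math


def pairwise_distances(cluster_a, cluster_b):
    """All distances between a point in cluster_a and a point in cluster_b."""
    return [math.dist(p, q) for p in cluster_a for q in cluster_b]


def single_linkage(cluster_a, cluster_b):
    """Nearest neighbor: smallest pairwise distance."""
    return min(pairwise_distances(cluster_a, cluster_b))


def complete_linkage(cluster_a, cluster_b):
    """Farthest neighbor: largest pairwise distance."""
    return max(pairwise_distances(cluster_a, cluster_b))


def average_linkage(cluster_a, cluster_b):
    """UPGMA: mean of all pairwise distances."""
    distances = pairwise_distances(cluster_a, cluster_b)
    return sum(distances) / len(distances)


def ward_increase(cluster_a, cluster_b):
    """Increase in within-cluster sum of squares if the two clusters merge."""
    n_a, n_b = len(cluster_a), len(cluster_b)
    mean_a = [sum(c) / n_a for c in zip(*cluster_a)]
    mean_b = [sum(c) / n_b for c in zip(*cluster_b)]
    return (n_a * n_b) / (n_a + n_b) * math.dist(mean_a, mean_b) ** 2


a = [(0.0,), (1.0,)]
b = [(3.0,), (5.0,)]
print(single_linkage(a, b))    # 2.0
print(complete_linkage(a, b))  # 5.0
print(average_linkage(a, b))   # 3.5
print(ward_increase(a, b))     # 12.25
```

On the same pair of clusters the criteria give quite different values, which is why the choice of linkage can change which clusters are merged first.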

Despite its effectiveness in capturing complex relationships within datasets, traditional hierarchical clustering does have some limitations. One major drawback is its computational cost: it needs the pairwise distances between all data points, and the distances between clusters must be updated at each iteration. This can be a significant burden for large datasets and may result in long processing times.

X-Means addresses the same problem of an unknown number of clusters from a different direction. Rather than building a full hierarchy, it extends k-means with a statistical model selection approach that identifies the most suitable number of clusters for a given dataset, while being less computationally demanding than traditional hierarchical methods.

Conclusion

One of the key advantages of X-Means clustering is that it adapts the number of clusters to the data. This lets it approximate datasets with varying densities and irregular shapes by using more clusters where needed, something that is challenging for conventional k-means with a fixed k. By automatically determining the optimal number of clusters, it also eliminates the need for trial and error in selecting k. This makes X-Means an efficient and effective tool for discovering meaningful patterns in complex datasets.